RPMsg Communication Flow

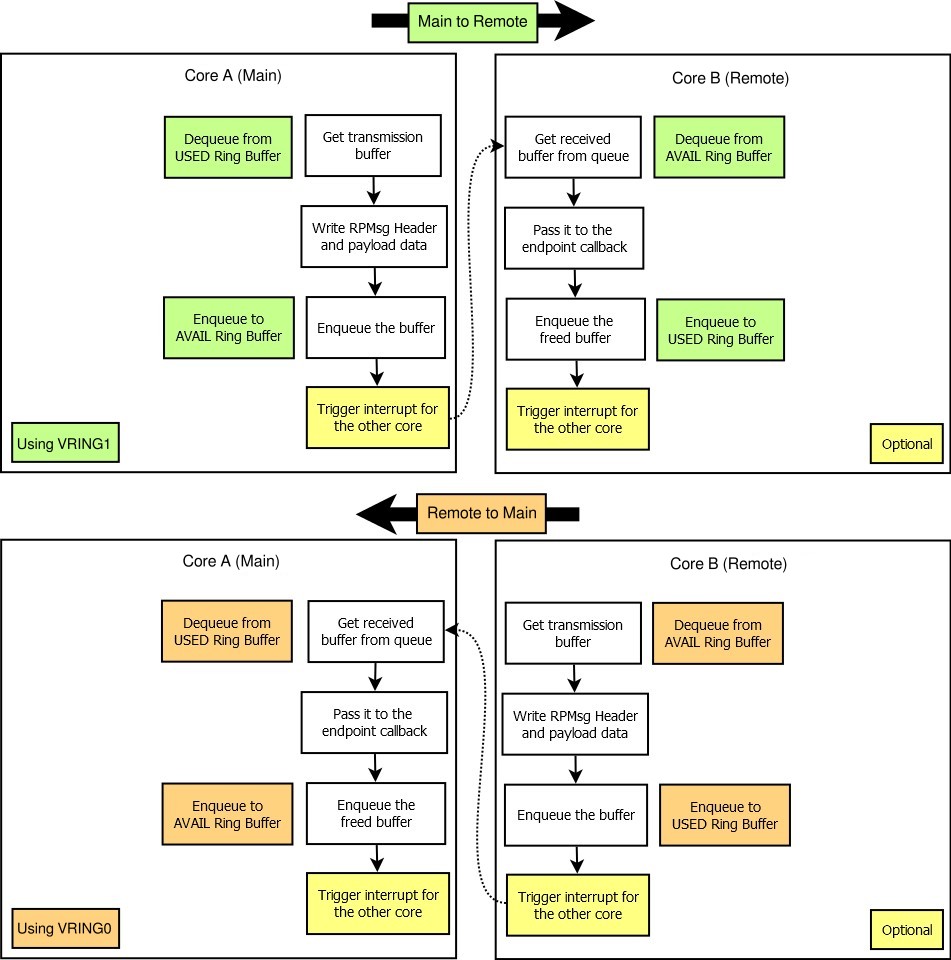

This section details the communication flow for the media access layer of the RPMsg protocol between the main and the remote processor.

Two VRINGs are used, VRING0 and VRING1, each with its own available and used ring buffers and associated descriptors.
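As a rough illustration of what each VRING contains, the sketch below mirrors the split-ring layout described in the Virtio specification: a descriptor table, an available ring and a used ring. The struct names follow the naming in the Linux `virtio_ring.h` header; `sketch_vring_size` and its `align` parameter are illustrative assumptions and not part of any RPMsg API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* One descriptor: points at a buffer in shared memory. */
struct vring_desc {
    uint64_t addr;  /* buffer address as seen by the peer */
    uint32_t len;   /* buffer length in bytes */
    uint16_t flags; /* chaining / write-only markers */
    uint16_t next;  /* next descriptor when chained */
};

/* Available ring: descriptor heads offered by the buffer provider. */
struct vring_avail {
    uint16_t flags;
    uint16_t idx;    /* free-running producer index */
    uint16_t ring[]; /* one entry per descriptor */
};

/* One used-ring entry: a buffer handed back by the consumer. */
struct vring_used_elem {
    uint32_t id;  /* head of the descriptor chain being returned */
    uint32_t len; /* number of bytes written into the buffer */
};

struct vring_used {
    uint16_t flags;
    uint16_t idx;
    struct vring_used_elem ring[];
};

/*
 * Bytes needed for one vring with `num` descriptors when the used ring
 * starts on an `align` boundary (assumed to be a power of two). Each ring
 * carries three 16-bit fields besides its entries: flags, idx and one
 * event-index field.
 */
size_t sketch_vring_size(unsigned int num, size_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    size_t desc_and_avail = sizeof(struct vring_desc) * num
                          + sizeof(uint16_t) * (3 + num);
    size_t used = sizeof(uint16_t) * 3
                + sizeof(struct vring_used_elem) * num;
    return ((desc_and_avail + align - 1) & ~(align - 1)) + used;
}
```

Since two VRINGs are used, the shared memory has to hold two such regions in addition to the message buffers themselves.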

The Virtio RPMsg bus vring allocation is an example implementation in which the main core runs the Linux kernel: VRING0 is vqs[0], the receive (rvq) VRING, and VRING1 is vqs[1], the transmit/send (svq) VRING.
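The index-to-role mapping above can be written down as a small lookup table. This is only a sketch: `vring_id`, `vq_role` and `vq_role_for` are hypothetical names introduced here for illustration, and the direction is given from the main core's point of view.

```c
#include <assert.h>

/* Hypothetical identifiers for the two VRINGs. */
enum vring_id { VRING0 = 0, VRING1 = 1 };

struct vq_role {
    const char *linux_name;    /* name used by the Linux Virtio RPMsg bus */
    const char *main_core_use; /* direction as seen from the main core */
};

/* Returns the role of vqs[vq_index] in the example allocation. */
const struct vq_role *vq_role_for(int vq_index)
{
    static const struct vq_role roles[] = {
        [VRING0] = { "rvq", "receive" },
        [VRING1] = { "svq", "send" },
    };
    assert(vq_index == VRING0 || vq_index == VRING1);
    return &roles[vq_index];
}
```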

Main to Remote RPMsg Flow

For the transmission of RPMsgs from the main core to the remote, the following actions are performed on VRING1, as shown in the upper part of the flow chart above:

The main core:

Gets a transmission buffer from the used queue of VRING1.

Writes the RPMsg header and payload to this buffer.

Enqueues this buffer containing the RPMsg to the available queue of VRING1 by setting the head index.

Optionally triggers an interrupt to inform the remote of message availability (if the F_NO_NOTIFY flag is not set in the available ring buffer).

The remote then:

Gets the received buffer from the available queue of VRING1.

Passes the buffer to the endpoint callback, which handles the message.

Enqueues the freed buffer to the used queue of VRING1, to make it available again for future transmissions from the main core.

Optionally triggers an interrupt to inform the main core of the freed buffer (if the F_NO_NOTIFY flag is not set in the used ring buffer).
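The main-to-remote steps can be sketched as a small in-memory model of VRING1. It is a simplification under stated assumptions: each ring is reduced to a FIFO of buffer indices, the used ring is pre-filled so that the main core has buffers to take, the endpoint callback is replaced by a copy into `out`, and the optional interrupts are left out. The header fields follow the `rpmsg_hdr` layout used by the Linux Virtio RPMsg bus; every other name (`vring_model`, `main_send`, `remote_receive`, `NUM_BUFS`, `BUF_SIZE`) is hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define NUM_BUFS 4u  /* hypothetical ring depth (power of two) */
#define BUF_SIZE 64u /* hypothetical buffer size in bytes */

struct rpmsg_hdr {
    uint32_t src;      /* source endpoint address */
    uint32_t dst;      /* destination endpoint address */
    uint32_t reserved;
    uint16_t len;      /* payload length in bytes */
    uint16_t flags;
};

/* Simplified ring: a FIFO of buffer indices with free-running counters. */
struct ring {
    uint16_t slot[NUM_BUFS];
    uint16_t head; /* advanced by the producer */
    uint16_t tail; /* advanced by the consumer */
};

struct vring_model {
    struct ring avail;
    struct ring used;
    uint8_t buf[NUM_BUFS][BUF_SIZE];
};

int ring_get(struct ring *r)
{
    if (r->tail == r->head)
        return -1; /* nothing queued */
    return r->slot[r->tail++ % NUM_BUFS];
}

void ring_put(struct ring *r, uint16_t id)
{
    assert((uint16_t)(r->head - r->tail) < NUM_BUFS); /* ring not full */
    r->slot[r->head++ % NUM_BUFS] = id;
}

/* Assumption: start with every buffer sitting in the used queue. */
void vring1_init(struct vring_model *v)
{
    memset(v, 0, sizeof(*v));
    for (uint16_t i = 0; i < NUM_BUFS; i++)
        ring_put(&v->used, i);
}

/* Main core: used queue -> write header and payload -> available queue. */
int main_send(struct vring_model *v, uint32_t src, uint32_t dst,
              const void *payload, uint16_t len)
{
    if (len > BUF_SIZE - sizeof(struct rpmsg_hdr))
        return -1;
    int id = ring_get(&v->used);
    if (id < 0)
        return -1; /* no free transmission buffer */
    struct rpmsg_hdr hdr = { .src = src, .dst = dst, .len = len };
    memcpy(v->buf[id], &hdr, sizeof(hdr));
    memcpy(v->buf[id] + sizeof(hdr), payload, len);
    ring_put(&v->avail, (uint16_t)id);
    return id;
}

/* Remote: available queue -> handle message -> back to the used queue. */
int remote_receive(struct vring_model *v, void *out, size_t cap)
{
    int id = ring_get(&v->avail);
    if (id < 0)
        return -1; /* no pending message */
    struct rpmsg_hdr hdr;
    memcpy(&hdr, v->buf[id], sizeof(hdr));
    size_t n = hdr.len < cap ? hdr.len : cap;
    memcpy(out, v->buf[id] + sizeof(hdr), n); /* stands in for the callback */
    ring_put(&v->used, (uint16_t)id);
    return (int)n;
}
```

A call to `main_send` followed by `remote_receive` leaves the buffer back in the used queue, ready for the next transmission from the main core.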

Remote to Main RPMsg Flow

For the transmission of RPMsgs from the remote to the main core, the following actions are performed on VRING0, as shown in the lower part of the flow chart above:

The remote:

Gets a transmission buffer, provided by the main core, from the available queue of VRING0.

Writes the RPMsg header and payload to this buffer.

Enqueues this buffer containing the RPMsg to the used queue of VRING0 by setting the head index.

Optionally triggers an interrupt to inform the main core of message availability (if the F_NO_NOTIFY flag is not set in the used ring buffer).

The main core then:

Gets the received buffer from the used queue of VRING0.

Passes the buffer to the endpoint callback, which handles the message.

Enqueues the freed buffer to the available queue of VRING0, to make it available again for future transmissions from the remote.

Optionally triggers an interrupt to inform the remote of the freed buffer (if the F_NO_NOTIFY flag is not set in the used ring buffer).
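The remote-to-main steps can be modelled in the same spirit, this time with index fields closer to a split ring: each side publishes through a free-running `idx` and keeps a private cursor for what it has already consumed. This is a sketch under assumptions: `vring0_model` and all function names are hypothetical, the RPMsg header is omitted so that only a raw payload is copied, and notifications and memory barriers are left out.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define NUM 4u       /* hypothetical ring depth (power of two) */
#define BUF_SIZE 32u /* hypothetical buffer size in bytes */

struct used_elem {
    uint32_t id;  /* buffer index being handed over */
    uint32_t len; /* bytes written by the remote */
};

struct vring0_model {
    uint16_t avail_idx;              /* published by the main core */
    uint16_t avail_ring[NUM];
    uint16_t used_idx;               /* published by the remote */
    struct used_elem used_ring[NUM];
    uint16_t last_avail;             /* remote's private cursor */
    uint16_t last_used;              /* main core's private cursor */
    uint8_t buf[NUM][BUF_SIZE];
};

/* Main core (buffer provider): offer every buffer to the remote. */
void main_post_buffers(struct vring0_model *v)
{
    memset(v, 0, sizeof(*v));
    for (uint16_t i = 0; i < NUM; i++)
        v->avail_ring[v->avail_idx++ % NUM] = i;
}

/* Remote: available queue -> write message -> used queue. */
int remote_send(struct vring0_model *v, const void *msg, uint32_t len)
{
    if (len > BUF_SIZE || v->last_avail == v->avail_idx)
        return -1; /* too large, or no buffer offered by the main core */
    uint16_t id = v->avail_ring[v->last_avail++ % NUM];
    memcpy(v->buf[id], msg, len);
    v->used_ring[v->used_idx % NUM] = (struct used_elem){ .id = id, .len = len };
    v->used_idx++;
    return 0;
}

/* Main core: used queue -> handle message -> back to the available queue. */
int main_receive(struct vring0_model *v, void *out, uint32_t cap)
{
    if (v->last_used == v->used_idx)
        return -1; /* nothing received */
    struct used_elem e = v->used_ring[v->last_used++ % NUM];
    uint32_t n = e.len < cap ? e.len : cap;
    memcpy(out, v->buf[e.id], n); /* stands in for the endpoint callback */
    v->avail_ring[v->avail_idx++ % NUM] = (uint16_t)e.id;
    return (int)n;
}
```

The last statement of `main_receive` is the step that makes the buffer available again for future transmissions from the remote.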

Vring queue and buffer allocation

The remote gets the received RPMsg buffer from the available ring buffer, processes it and then returns it to the used ring buffer. When the remote is sending a message to the main core, the roles of the available and used ring buffers are swapped: the available ring buffer supplies empty buffers and the used ring buffer carries the filled messages.

The reason for swapping the roles of the ring buffers stems from the main core being the buffer provider. The main core is in charge of the shared memory allocation.

When the main core, or buffer provider, does not fill the available ring buffer of VRING0, the remote is unable to send a message to the main core. This can be used to throttle the communication generated by the remote.
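The throttling effect can be illustrated with a credit counter, where each buffer in the available ring buffer of VRING0 is one credit for the remote. This is a deliberately minimal sketch: `vring0_credit`, `remote_try_send` and `main_consume` are hypothetical names, and the actual ring contents are not modelled.

```c
#include <assert.h>
#include <stdbool.h>

/* Buffer counts for VRING0, as seen by both cores. */
struct vring0_credit {
    unsigned int avail; /* empty buffers offered by the main core */
    unsigned int used;  /* filled buffers waiting for the main core */
};

/* Remote: sending consumes one available buffer, if there is one. */
bool remote_try_send(struct vring0_credit *c)
{
    if (c->avail == 0)
        return false; /* throttled: nothing to write into */
    c->avail--;
    c->used++;
    return true;
}

/* Main core: consume one message and decide whether to hand the buffer back. */
void main_consume(struct vring0_credit *c, bool refill)
{
    assert(c->used > 0);
    c->used--;
    if (refill)
        c->avail++;
}
```

In this model, calling `main_consume` with `refill` set to `false` keeps the remote blocked even after its message has been handled, which is the throttling behaviour described above.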

Note that the main core always dequeues from the used ring buffer and enqueues to the available ring buffer.

The triggering of interrupts is optional. It is governed by the F_NO_NOTIFY flag in the used and available ring buffer flags. Notification interrupts are recommended for performance reasons and are the default, because the flag is clear unless it is explicitly set. The user can override this by setting the flag if there is a particular reason to poll for messages rather than use interrupts.
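A minimal sketch of this decision is shown below. It assumes the flag occupies bit 0 of the ring's `flags` field, as the notification-suppression flags do in the Virtio split-ring layout; the macro value, `publish_and_maybe_kick`, `kick_fn` and `count_kick` are names introduced here for illustration only.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define F_NO_NOTIFY 0x1u /* assumed bit position of the flag */

typedef void (*kick_fn)(void *ctx);

/*
 * Publish one new ring entry by advancing the index, then notify the peer
 * unless the peer has asked not to be notified. A real implementation
 * would also need a memory barrier between the index update and the flag
 * check. Returns true if a notification was sent.
 */
bool publish_and_maybe_kick(uint16_t *idx, uint16_t peer_flags,
                            kick_fn kick, void *ctx)
{
    (*idx)++;
    if (peer_flags & F_NO_NOTIFY)
        return false; /* peer polls the ring instead */
    kick(ctx);
    return true;
}

/* Example notification hook: counts how often it was triggered. */
void count_kick(void *ctx)
{
    (*(int *)ctx)++;
}
```

With the flag clear, every published entry triggers the hook; with the flag set, the index still advances, so a polling peer can detect new entries by comparing the index against its own cursor.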